Last Updated: September 22, 2025

Introduction

Artificial Intelligence (AI) is a branch of computer science that aims to create machines that mimic human intelligence. Originally, this didn’t mean creating machines that can think for themselves, but rather machines that can be programmed to think or learn in a way that appears intelligent. However, there seems to be very little difference, and potentially none at all, between “actual intelligence” (whatever that means) and “artificial intelligence”. More on this later.

The language I’m using could be seen as anthropomorphising. However, these models reflect some of the ways the human brain works and learns, and I believe analogies that use human-specific language are accurate enough.

Why Does It Work?

The “intelligence” and “reasoning” abilities of any given AI system are not binary: an AI is not simply intelligent or unintelligent. These reasoning abilities exist on a sliding scale, much as they do in humans. There is only so much reasoning a particular model can perform, and only so much the model can “remember” (or juggle in its “mind”) at any given time. An AI system’s intelligence and working memory size seem to be the main constraints on its ability. This is still very high-level, though, and hasn’t really answered the question. Funnily enough, we are once again asking “what is intelligence?”

Technically, all these models do is predict the next word (actually “token” rather than “word”, but that’s not too important a distinction at the moment). That said, viewing these models as “only text prediction and nothing more” is majorly missing the point. These Natural Language Processing (NLP) models (like ChatGPT) have been trained on such a large amount of diverse text that they have learned the underlying logic and patterns of human language as presented through text. Language is the vehicle we humans use to convey our thoughts, reasoning, feelings, ideas, aspirations, and emotions - these are emissions and admissions of our own intelligence. Hence, intelligence is a fundamental requirement for creating the thoughts we have and the language we use.

It seems that for an AI to excel at modelling language, it must also learn to model intelligence itself. Out of what is “only” an advanced text prediction algorithm emerges a veritable “ghost in the machine”, with a surprisingly organic and authentic display of robust artificial intelligence.
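To make “predicting the next word” a little more concrete, here is a deliberately tiny sketch. It is not how ChatGPT or any modern model is built (those use neural networks trained on enormous corpora). It is a simple word-pair counter, and the corpus and the function names `train_bigram` and `predict_next` are made up for illustration. The core idea is the same, though: look at what came before and pick a likely continuation.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follow_counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        follow_counts[current][following] += 1
    return follow_counts

def predict_next(follow_counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = follow_counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical toy corpus, chosen only so the counts are easy to check by hand.
corpus = "the cat sat on the mat . the cat sat on the rug . the dog ate the fish ."
model = train_bigram(corpus)

print(predict_next(model, "sat"))    # on
print(predict_next(model, "the"))    # cat
print(predict_next(model, "zebra"))  # None (never seen in the corpus)
```

A counter like this only ever looks one word back and has no notion of meaning. The argument above is that once the predictor is large enough and trained on enough text, doing well at the same task seems to require something that looks a lot like reasoning.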

What's On Offer

Large language model assistants keep expanding their skill sets—mixing multimodality, automation, and deeper reasoning. Here’s a snapshot of what the leading platforms offer right now:

ChatGPT (OpenAI)

- The Free tier keeps limited GPT-5 access, real-time search, file uploads, Projects, and standard voice, while Plus ($20/month) and Pro ($200/month) expand usage limits, unlock Deep Research, multiple reasoning models, and Advanced Voice with screen sharing plus early previews of the Codex agent.

- ChatGPT Business ($25 per user/month billed annually) and Enterprise build on that with unlimited GPT-5 messaging, connectors into company tools, SAML SSO, MFA, compliance alignments, and richer admin controls for governed deployments.

Microsoft Copilot

- Copilot’s free tier spans Windows, web, and mobile experiences, but Copilot Pro ($20/user/month) adds priority access to top models, 100 Designer boosts, and deep integrations across Word, Excel (preview), PowerPoint, Outlook, and OneNote.

- Microsoft 365 Copilot for organisations runs $30/user/month (annual) and layers secure Microsoft Graph grounding, Copilot Studio agents, enterprise analytics, and compliance controls across Teams, Outlook, Word, PowerPoint, and Excel.

Gemini (Google)

- Google AI Pro ($19.99/month) upgrades the Gemini app with Gemini 2.5 Pro access, Deep Research, a 1-million-token context window, Gemini in Gmail and Docs, and 2 TB of storage bundled into the plan.

- The AI Ultra tier ($249.99/month) adds Deep Think reasoning, Veo 3 video generation, Chrome task automation, larger daily Deep Research allowances, and other early experimental features aimed at production-grade creators.

Claude (Anthropic)

- Claude’s Free tier covers chat, code, and image analysis; Pro ($20/month or $17/month billed annually) increases usage, unlocks Projects, Research, and terminal access to Claude Code, while Max 5x/20x plans at $100/$200 per month deliver higher quotas and early features.

- Claude Team seats start at $25 per user/month (annual) with central billing, governance, and premium Claude Code options, and Enterprise adds SSO, SCIM, audit logs, enhanced context windows, and compliance APIs for regulated deployments.

Llama 4 (Meta) - Open Source

- Meta’s Llama 4 Scout and Maverick releases are open-weight, multimodal models powering Meta AI across WhatsApp, Messenger, Instagram, and Meta.ai with support for text, video, images, and audio.

- Maverick’s mixture-of-experts design keeps only 17 billion of its 400 billion parameters active per token, while the smaller Scout model (also 17 billion active parameters) offers a 10-million-token context window and can run on a single H100. Both are available for download via Llama.com and partner hubs for self-hosting.

Grok (xAI)

- xAI has opened Grok 3 broadly—free users get 10 prompts every two hours, while X Premium+ subscribers ($40/month or $395/year in the U.S.) gain higher usage limits, an ad-free X experience, and early access to features like Voice Mode.

- Power users can step up to the standalone SuperGrok subscription (around $30/month) for DeepSearch, Big Brain reasoning, and unlimited image generation, or SuperGrok Heavy at $300/month for Grok 4 Heavy multi-agent previews and priority support.
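As a quick aside on the Llama 4 Maverick figures quoted above, the arithmetic below shows how small the active share of a mixture-of-experts model is per token. It uses only the two numbers from the text (17 billion active, 400 billion total). The helper name `active_fraction` is just for illustration.

```python
def active_fraction(active_params, total_params):
    """Share of a model's parameters that are used for any single token."""
    return active_params / total_params

ACTIVE_PARAMS = 17_000_000_000   # active per token, as quoted above
TOTAL_PARAMS = 400_000_000_000   # total parameters, as quoted above

share = active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS)
print(f"{share:.2%} of parameters are active per token")  # 4.25%
```

Note that this fraction describes the compute spent per token. All of the parameters still have to be stored somewhere, so the memory footprint follows the total, not the active count.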

The First Pitfall

The first pitfall is assuming every ChatGPT login delivers the same experience. OpenAI now differentiates sharply between free, paid, and workplace offerings—so match your use case to the right plan.

- Unpaid / Free: The Free tier keeps limited GPT-5 access, search, voice, file uploads, and Projects, but throttles throughput and omits premium reasoning models. It is fine for occasional prompting, yet easy to outgrow once workflows get heavier.

- Individual Paid: Plus ($20/month) and Pro ($200/month) expand limits and unlock Advanced Voice with screen sharing, Deep Research, multiple reasoning models, and early previews like the Codex agent. That is exactly what you need when you start automating tasks or running longer research sessions.

- Business & Enterprise: Business ($25 per user/month billed annually) adds unlimited GPT-5 messaging, connectors into internal tools, SAML SSO, MFA, and compliance guardrails, while Enterprise layers on bespoke retention policies, broader governance, and dedicated support for regulated deployments.

Match the tier to the work you need to do—casual prompting, power-user automation, or governed teamwork—then pilot for a week before you roll it out widely.
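If it helps to see the budget side of that decision, here is a rough sketch that turns the monthly prices listed above into annual figures. The prices are the ones quoted in this article and will likely change. The team size of 10 and the helper name `annual_cost` are assumptions for illustration only.

```python
# Monthly prices in USD as quoted in this article (subject to change).
MONTHLY_PRICE_USD = {
    "Plus": 20,       # per individual
    "Pro": 200,       # per individual
    "Business": 25,   # per user, billed annually
}

def annual_cost(plan, seats=1):
    """Yearly cost in USD for `seats` people on the given plan."""
    return MONTHLY_PRICE_USD[plan] * 12 * seats

# Hypothetical team of 10 people.
for plan in MONTHLY_PRICE_USD:
    print(f"{plan}: ${annual_cost(plan, seats=10):,} per year")
```

This only compares sticker prices. It says nothing about usage limits or admin features, which is why a short pilot is still worth doing before committing.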

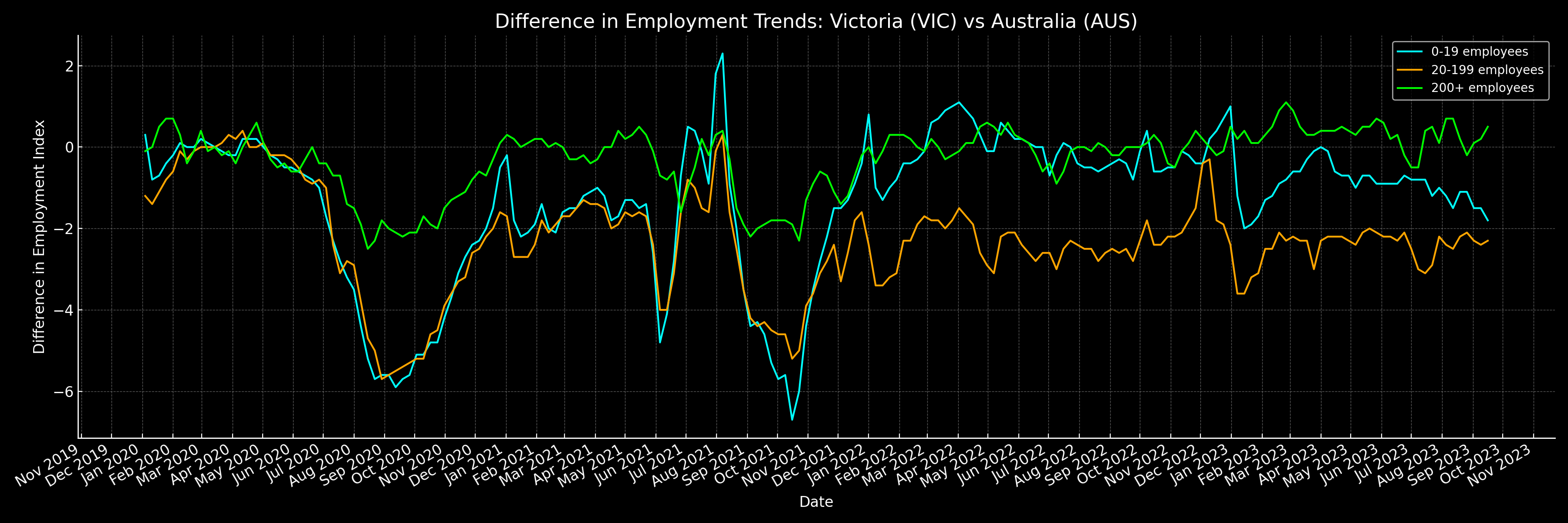

When we trialled ChatGPT Business, we dropped the ABS employment dataset into a Project, let the Codex agent build out the exploratory code, and returned to the automatically generated visualisation below—a reminder that the higher tiers bundle workflow and automation upgrades, not just faster replies.

I'll eventually come back to this page to write more, but for now I'll leave it here. Be sure to check out part 2.